
Dear Users,

During this week, we will enable encryption for interactive jobs. This step is essential to enhance the security of data transmission and communication within the MetaCentrum computing environment.

For users, this update brings two practical changes:

The system will now strictly require a valid Kerberos ticket.

If you do not have a valid Kerberos ticket at the moment the interactive job starts, the system will prompt you to renew it (by entering your password or running kinit) when you attempt to connect to the job.

If your ticket is valid, the login process will proceed as usual without further interaction.

We are introducing a security time limit for starting your work.

Once the interactive job starts on the cluster (a compute node is allocated), you have 3 hours to start interacting with the job.

If you do not connect to the running job within 3 hours, it will be automatically cancelled.
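For the Kerberos requirement above, a small shell helper can check your ticket before you connect to a running interactive job. This is a minimal sketch, not a MetaCentrum tool: the function name `ensure_kerberos_ticket` is hypothetical, and it assumes that `klist -s` exits with a non-zero status when no valid ticket is present (MIT Kerberos behaviour; check `man klist` on your frontend).

```shell
# Hypothetical helper: renew the Kerberos ticket only when needed.
# Assumes `klist -s` is silent and returns non-zero if there is no valid ticket.
ensure_kerberos_ticket() {
    if klist -s 2>/dev/null; then
        echo "Kerberos ticket is valid."
    else
        echo "No valid Kerberos ticket found, running kinit."
        kinit    # prompts for your password and obtains a new ticket
    fi
}
```

You could run `ensure_kerberos_ticket` on the frontend right before connecting to the job, so the connection proceeds without an extra password prompt.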

These measures help us maintain a secure and efficiently utilized infrastructure. Thank you for your understanding.

In case of any issues, please contact user support.

The MetaCentrum Team

Are you computing with us? We want to hear from you.

To ensure our computing and cloud services continue to meet the demands of your research, we need your input. Your feedback is crucial for our strategic planning: it helps us identify exactly which aspects of our infrastructure, from job scheduling to storage availability, need improvement or expansion.

If you have already completed the survey, thank you very much for your feedback.

We are pleased to announce the successful integration of new computing clusters owned by CESNET and the University of West Bohemia (ZČU) into the MetaCentrum infrastructure. This expansion brings increased capacity for both CPU and GPU calculations, as well as a specialized SMP node with large shared memory.

Below are the technical specifications of the new machines.

The adan cluster replaces the original cluster of the same name. It is designed for demanding CPU computations and does not contain graphics accelerators. The cluster is already available in the standard scheduler queues.

Owner: CESNET

Address: adan[1-48].grid.cesnet.cz

Total capacity: 48 nodes / 6 144 CPU cores

Node configuration:

CPU: 2x AMD EPYC 9554 64-Core Processor

RAM: 768 GiB

Disk: 2x 3.84 TB NVMe

Net: 25 Gbit/s

Node performance: SPECrate 2017_fp_base: 1360

The alfrid[1-9].meta.zcu.cz cluster underwent significant modernization and expansion in two phases (May and December 2025). It replaces the original hardware and now offers 8 GPU nodes and one SMP node.

a. GPU nodes (alfrid, 4 nodes, and alfrid-II, 4 nodes). These nodes are equipped with NVIDIA L40 and L40S accelerators, suitable for accelerated computations and AI tasks.

Owner: ZČU Plzeň

Total capacity: 8 nodes / 1 024 CPU cores

Node configuration:

CPU: 2x AMD EPYC 9554 64-Core Processor

RAM: 1 536 GiB (alfrid) / 768 GiB (alfrid-II)

GPU: 2x NVIDIA L40 48GB (alfrid) / 4x NVIDIA L40S 48GB (alfrid-II)

Disk: 2x 7 TB NVMe

Net: 25 Gbit/s

Node performance: SPECrate 2017_fp_base: 1230

b. SMP node (alfrid-smp). A specialized node designed for tasks requiring a large amount of shared memory.

Owner: ZČU Plzeň

Address: alfrid-smp.meta.zcu.cz

Capacity: 1 node / 128 CPU cores

Node configuration:

CPU: 2x AMD EPYC 9554 64-Core Processor

RAM: 4 608 GiB (approximately 4.5 TiB)

Disk: 2x 7 TB NVMe

Net: 10 Gbit/s

Node performance: SPECrate 2017_fp_base: 1200

A complete list of available computing servers and their current utilization can be found here: MetaCentrum Hardware

We believe this new hardware will contribute to the effective implementation of your calculations and scientific projects.


On Thursday, October 2, 2025, the MetaCentrum 2025 High-Performance Computing Seminar took place at the Lávka Club in Prague, with more than 90 participants attending in person and another 40 joining online.

The program focused on data processing and storage, security, working with containers and the cloud, as well as the use of AI models on the MetaCentrum and CERIT-SC infrastructure. Experiences were shared not only by experts from CESNET and CERIT-SC, but also by users from research groups at Masaryk University and Charles University.

The seminar presentations are available on the event page. The same link will also host a video recording of the seminar once it has been edited.

Dear users,

https://events.it4i.cz/event/354/


Dear users,

We cordially invite you to the MetaCentrum 2025 Seminar, taking place on Thursday, October 2, 2025, at Novotného lávka in Prague with a stunning view of Charles Bridge and the city center.

This seminar will focus on data processing, analysis, and storage, as well as introducing the latest developments in grid, cloud, and Kubernetes environments at MetaCentrum and CERIT-SC.

Program and more information:

https://metavo.metacentrum.cz/en/seminars/Seminar2025/index.html

Venue:

Lávka, Novotného lávka 201/1

110 00 Prague 1 – Staré Město

http://lavka.cz/

We look forward to seeing you there!

We are pleased to announce that a new computing cluster fobos.meta.zcu.cz has been successfully integrated into the MetaCentrum infrastructure.

The cluster is available in regular queues.

A complete list of available computing servers can be found here: https://metavo.metacentrum.cz/pbsmon2/hardware

We believe that this new hardware will contribute to more efficient execution of your computations and scientific projects!

We're pleased to announce the availability of a new fast shared scratch using the parallel distributed file system BeeGFS on our bee.cerit-sc.cz cluster. This new resource, available as scratch_shared, is specifically designed for high-performance computing (HPC) needs and offers several advantages for data-intensive and compute-intensive applications.

BeeGFS is ideal for demanding jobs that require:

We are pleased to announce that new computing clusters elbi1.hw.elixir-czech.cz, elmu1.hw.elixir-czech.cz, eluo1.hw.elixir-czech.cz, and elum1.hw.elixir-czech.cz, operated by the ELIXIR project, have been successfully integrated into the MetaCentrum infrastructure. The clusters have very similar configurations and are located at different sites.

elmu1.hw.elixir-czech.cz (2400 CPU cores, 25 nodes), compute cluster at MUNI Brno

eluo1.hw.elixir-czech.cz (576 CPU cores, 6 nodes), compute cluster at UOCHB Prague

elum1.hw.elixir-czech.cz (96 CPU cores, 1 node), compute cluster at UMG Prague

The clusters are available in ELIXIR's priority queues. The elbi1 cluster has 2 GPU cards and is also accessible in the gpu queue. Other users can use the clusters in short regular queues with a time limit of 24 hours.

A complete list of available computing servers can be found here: https://metavo.metacentrum.cz/pbsmon2/hardware

We believe that this new hardware will contribute to more efficient execution of your computations and scientific projects!

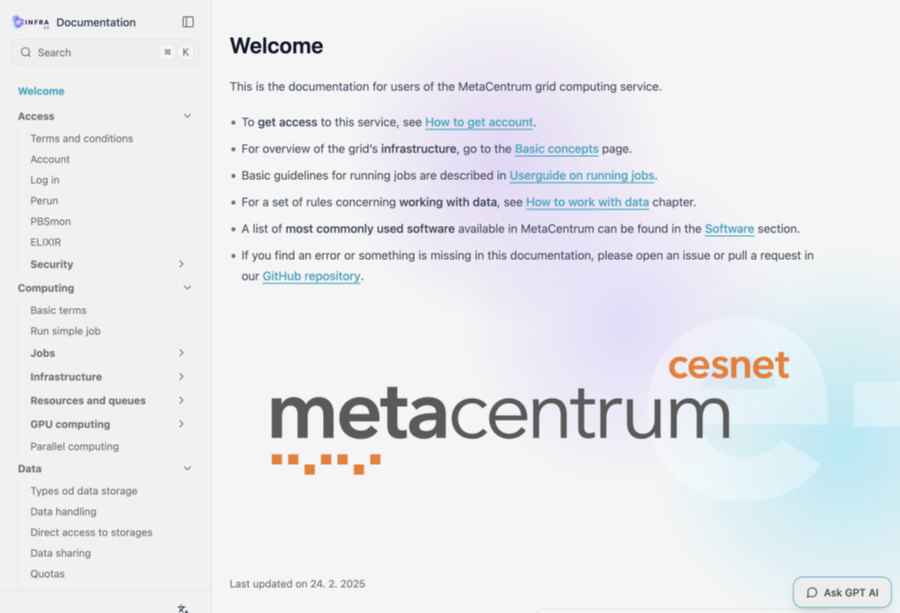

Coming soon: our existing documentation at https://docs.metacentrum.cz/ has undergone a visual upgrade.

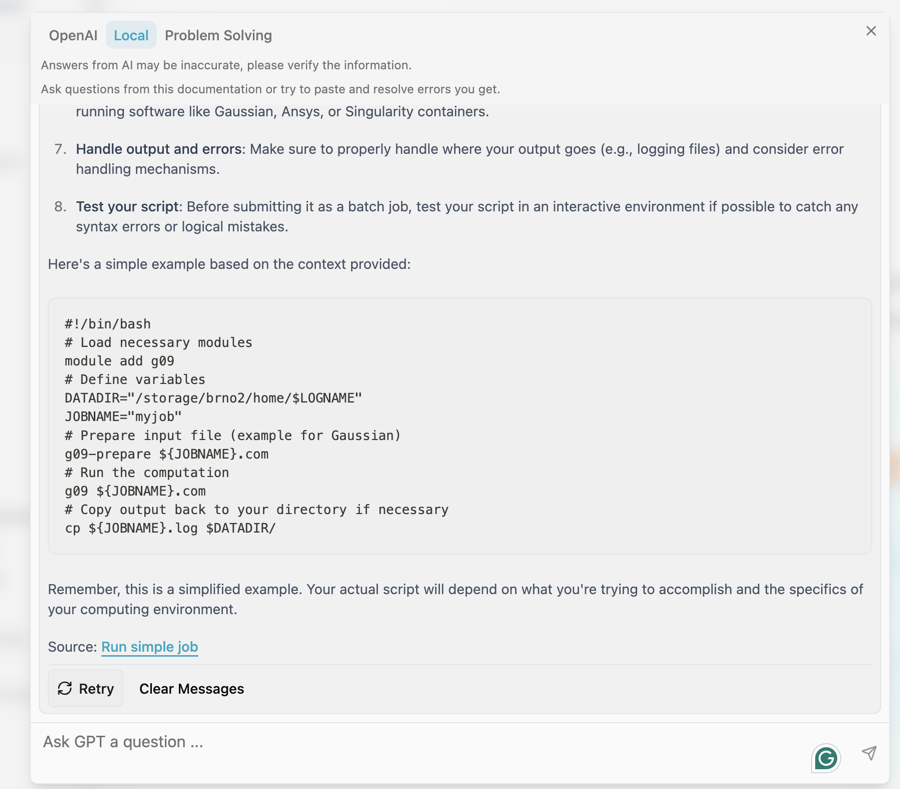

While its structure remains unchanged, we've converted it to a new technology that supports AI chat integration (https://docs.metacentrum.cz/en/docs/tutorials/chat-help) to help you quickly find answers.

The AI chat enables interactive search of the information contained in the documentation. Select Local if you want a response based on the content of the documentation. The Problem Solving section covers the most common problems you may encounter. We will extend both sections with frequently asked questions.

We will be glad to receive your feedback. We will continuously improve the documentation based on your questions and comments.

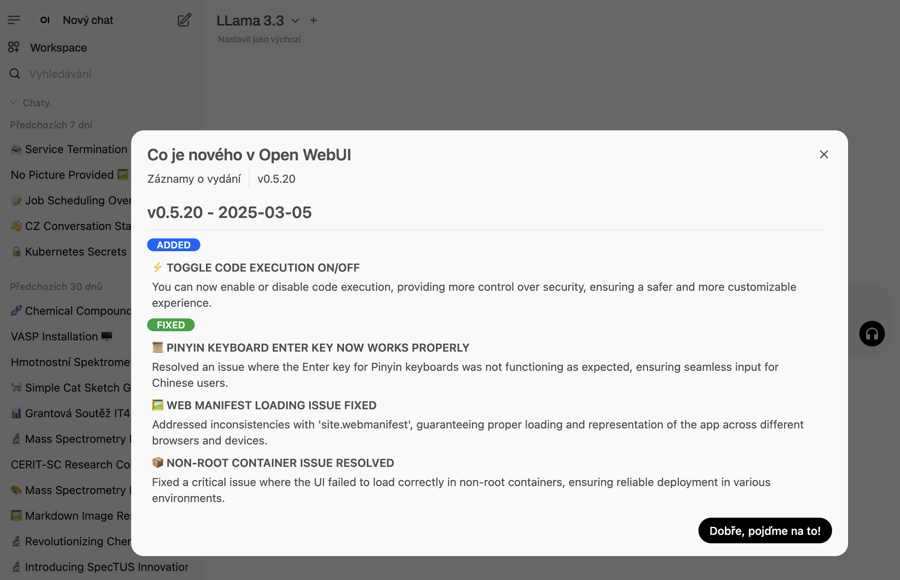

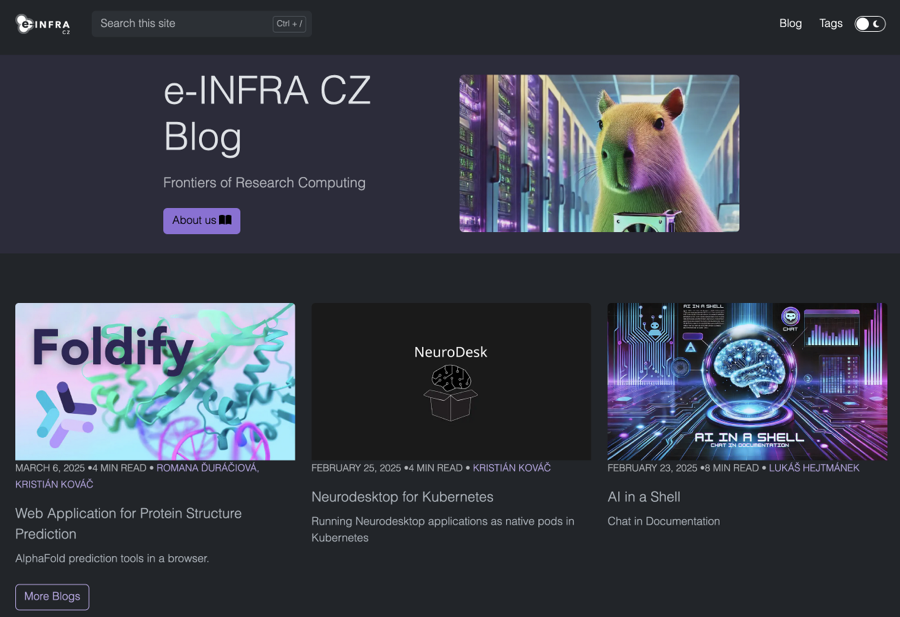

At the same time, we have launched a separate AI chat at https://chat.ai.e-infra.cz/. Several models are available to try out, and the chat can also generate images and read attached documents. The models run locally on our computing resources, so you don't have to worry about data leaking outside our infrastructure.

All models offered are also available via API and can be used for your projects. Documentation is available at

https://docs.cerit.io/en/docs/web-apps/chat-ai

You can find interesting facts about the chat and other infrastructure-related topics on our blog at https://blog.e-infra.cz/

Dear Users,

This competition is an excellent opportunity to gain priority access to support your upcoming projects. More detailed information can be found in the attached invitation.


We would like to inform users about a change in access to the NVIDIA DGX H100 80GB (capy.cerit-sc.cz) computing system.

From now on, access will only be granted based on an approved request for computing time. The criteria and application process can be found here: Link to documentation.

If your computing requirements do not meet the specified criteria but you still need a GPU with large memory, you can use the GPU cluster bee (bee.cerit-sc.cz) with NVIDIA 2×H100 94GB. More information about this cluster can be found in the previous news post: Link to news.

Yours MetaCentrum

We are pleased to announce that a new computing cluster farin.grid.cesnet.cz, operated by the Faculty of Civil Engineering at CTU in Prague, has been successfully integrated into the MetaCentrum infrastructure.

The cluster is available in the owner’s priority queue cvut@pbs-m1.metacentrum.cz. Other users can access the cluster in short regular queues with a time limit of up to 24 hours. Students and employees of the Faculty of Civil Engineering at CTU can request access to the priority queue.

A complete list of available computing servers can be found here: https://metavo.metacentrum.cz/pbsmon2/hardware

We believe that this new hardware will contribute to more efficient execution of your computations and scientific projects!

The migration of projects running in the e-INFRA CZ / Metacentrum OpenStack cloud Brno G1 [1] to the new environment Brno G2 [2], which took place during 2024, is approaching its final stage.

Users will be able to migrate personal projects [3] themselves from February 2025.

The migration procedure described on the websites [2] and [4] will be updated during January 2025.

We will keep you informed in more detail about the procedure and any news on the homepage of the G2 e-INFRA CZ / Metacentrum OpenStack cloud [2].

Thank you for your understanding.

e-INFRA CZ / Metacentrum OpenStack cloud team

[1] https://cloud.metacentrum.cz/

[2] https://brno.openstack.cloud.e-infra.cz/

[3] https://docs.e-infra.cz/compute/openstack/technical-reference/brno-g1-site/get-access/#personal-project

[4] https://docs.e-infra.cz/compute/openstack/migration-to-g2-openstack-cloud/#may-i-perform-my-workload-migration-on-my-own

Dear MetaCentrum Users,

We are pleased to announce several updates that will enhance your computing capabilities within our center. We look forward to helping you streamline your projects with state-of-the-art software and new services.

MetaCentrum now offers new commercial licenses for **MolPro** and **Turbomole**, which are designed for quantum chemistry calculations. These tools enable users to perform detailed simulations and analyses of molecular systems with higher accuracy and efficiency.

For more details on all software options available at MetaCentrum, please visit the following link: https://docs.metacentrum.cz/software/alphabet/

We are pleased to introduce the new service Foldify, which is now fully integrated into the Kubernetes environment. Foldify is a cutting-edge platform for protein folding in 3D space, known for its easy and user-friendly interface. This service significantly simplifies and streamlines the work of professionals in biochemistry and biophysics. It offers users a wide range of data processing options, as it supports not only the popular AlphaFold but also tools such as ColabFold, OmegaFold, and ESMFold.

You can discover and utilize the Foldify service at the following address: https://foldify.cloud.e-infra.cz/

Wishing you a peaceful Christmas and all the best in the New Year,

Your MetaCentrum

MetaCentrum has recently been expanded with two new powerful clusters:

1) Masaryk University (CERIT-SC) added 10 additional nodes with a total of 960 CPU cores and 20x NVIDIA H100 with 94 GB of GPU RAM, suitable for AI-intensive computing.

2) The Institute of Physics of the Academy of Sciences added a new cluster, magma.fzu.cz, consisting of 23 nodes with a total of 2208 CPU cores and 1.5 TB of RAM per node.

1) Cluster bee.cerit-sc.cz

Ten nodes are included in the MetaCentrum batch system, with a total of 960 CPU cores and 20x NVIDIA H100. Each node has the following configuration:

| Component | Configuration |
|---|---|
| CPU | 2x AMD EPYC 9454 48-Core Processor |
| RAM | 1536 GiB |
| GPU | 2x H100 with 94 GB GPU RAM |
| disk | 8x 7TB SSD with BeeGFS support |
| net | Ethernet 100Gbit/s, InfiniBand 200Gbit/s |
| note | Performance of each node according to SPECrate 2017_fp_base = 1060 |
| owner | CERIT-SC |

The cluster supports NVIDIA GPU Cloud (NGC) tools for deep learning, including pre-configured environments, and is accessible in the regular gpu queues.

We are also preparing a change in access to the DGX H100 machine, which will remain in the dedicated queue gpu_dgx@meta-pbs.metacentrum.cz. It will be available on demand, and only to users who can demonstrate that their jobs support NVLink and can use at least 4, or all 8, GPU cards at once. We will keep you posted on the upcoming change.

2) Cluster magma.fzu.cz

Twenty-three new nodes are included in the MetaCentrum batch system, with a total of 2208 CPU cores and the following configuration for each node:

| Component | Configuration |
|---|---|
| CPU | 2x AMD EPYC 9454 48-Core Processor @ 2.7GHz |
| RAM | 1536 GiB |
| disk | 1x 3.84 TB NVMe |
| net | Ethernet 10Gbit/s |
| note | Performance of each node according to SPECrate 2017_fp_base = 1160 |
| owner | FZÚ AV ČR |

The cluster is accessible in the owner's priority queue luna@pbs-m1.metacentrum.cz and, for other users, in short regular queues.

A complete list of available hardware: http://metavo.metacentrum.cz/pbsmon2/hardware.

Dear users,

we would like to forward information about the IT4I grant competition:


Dear users,

At the beginning of March, we announced the start of the migration from PBSPro to the new OpenPBS.

Please use the new OpenPBS environment pbs-m1.metacentrum.cz for your jobs. If you don't want to change anything in your scripts, submit jobs from frontends running Debian12. The queue names remain the same; only the PBS server changes (QUEUE_NAME@pbs-m1.metacentrum.cz).
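If your scripts or commands name a queue together with a server, only the part after `@` has to change. The helper below is a minimal sketch with a hypothetical name, `openpbs_queue`; `default` and `gpu@meta-pbs.metacentrum.cz` serve only as example inputs, and `myjob.sh` in the usage comment is a placeholder.

```shell
# Hypothetical helper: point a queue specification at the new OpenPBS server.
openpbs_queue() {
    # Drop everything from the first "@" on, e.g. "gpu@meta-pbs.metacentrum.cz" -> "gpu".
    local queue="${1%%@*}"
    printf '%s@pbs-m1.metacentrum.cz\n' "$queue"
}

# Example use (placeholder script name):
#   qsub -q "$(openpbs_queue default)" myjob.sh
```

Keeping the queue name and swapping only the server mirrors the statement above that queue names stay the same.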

The list of available frontends including the current OS can be found at https://docs.metacentrum.cz/computing/frontends/

About three quarters of the clusters are now available in the new OpenPBS environment. We are working hard to reinstall the rest, which we can only do once the jobs running on them have finished.

Overview of machines with Debian12 feature: https://metavo.metacentrum.cz/pbsmon2/props?property=os%3Ddebian12

You can test whether your job will run in the new OpenPBS environment in the qsub builder: https://metavo.metacentrum.cz/pbsmon2/qsub_pbspro

For up-to-date information on the migration, see the documentation at https://docs.metacentrum.cz/tutorials/debian-12/ (we will update the migration procedure here).

Your MetaCenter

Dear users,

We have made a change to the Open OnDemand (OOD) service that allows OOD jobs to be started on clusters that do not have a default home on the brno2 storage. Due to this change, the existing data, command history, etc., stored on brno2 will not be available in new OOD jobs if they are run on a machine with a different home directory.

To access the original data on the brno2 storage, create a symbolic link in your new home directory that points to the file on brno2. The example below sets up such a link for the R program's history:

ln -s /storage/brno2/home/user_name/.Rhistory /storage/new_location/home/user_name/.Rhistory
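If you want to link several files at once (for example, shell and R history), the same `ln -s` step can be wrapped in a loop. This is a minimal sketch: the function name `link_from_old_home` is hypothetical, both home directories are passed as arguments, and existing files in the new home are never overwritten.

```shell
# Hypothetical helper: link selected files from an old home into a new one.
# Usage: link_from_old_home OLD_HOME NEW_HOME FILE...
link_from_old_home() {
    local old_home="$1" new_home="$2"
    shift 2
    local f
    for f in "$@"; do
        if [ -e "$new_home/$f" ] || [ -L "$new_home/$f" ]; then
            # Never replace a file or link that already exists in the new home.
            echo "skip: $new_home/$f already exists"
        elif [ -e "$old_home/$f" ]; then
            ln -s "$old_home/$f" "$new_home/$f"
            echo "linked: $new_home/$f -> $old_home/$f"
        else
            echo "missing: $old_home/$f"
        fi
    done
}
```

For example: `link_from_old_home /storage/brno2/home/user_name "$HOME" .Rhistory .bash_history`, where user_name is your login.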

Yours MetaCenter

The e-INFRA CZ Conference 2024, which took place on 29-30 April 2024 at the Occidental Hotel in Prague, was attended by 180 guests.

Presentations are available on the event page at https://www.e-infra.cz/konference-e-infra-cz

A video recording from the whole event will be available soon.

At the beginning of March, we announced the start of the migration from PBSPro to the new OpenPBS.

If this has not already happened, please use the new OpenPBS environment pbs-m1.metacentrum.cz for your jobs. If you don't want to change anything in your scripts, submit jobs temporarily from the new zenith frontend or from the reinstalled nympha, tilia and perian frontends running in the new OpenPBS environment (already with Debian12 OS). The other frontends will be migrated gradually.

For a list of available frontends, including the current OS, see https://docs.metacentrum.cz/computing/frontends/

The new OpenPBS can also be accessed from other frontends; the openpbs module (module add openpbs) must be activated in such case.

We are continuously solving compatibility problems between some applications and Debian12 by recompiling software modules. If you encounter a problem with your application, try adding the debian11/compat module at the beginning of your startup script (module add debian11/compat). If problems persist (missing libraries, etc.), let us know at meta(at)cesnet.cz.
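To apply the debian11/compat tip to an existing job script without disturbing its header, the module line should go after the shebang and the #PBS directives, because PBS stops reading directives at the first executable line. The function below is a minimal sketch with a hypothetical name, `add_compat_module`; it edits the script in place and does nothing if the module line is already there.

```shell
# Hypothetical helper: insert "module add debian11/compat" into a job script.
add_compat_module() {
    local script="$1" tmp
    # Do nothing if the script already loads the module.
    if grep -qF 'module add debian11/compat' "$script"; then
        return 0
    fi
    tmp=$(mktemp) || return 1
    awk '
        # Insert before the first line that is neither blank nor a comment,
        # so the shebang and #PBS directives stay at the top.
        !done && !/^[ \t]*#/ && !/^[ \t]*$/ { print "module add debian11/compat"; done = 1 }
        { print }
        # Script contained only comments or blank lines: append at the end.
        END { if (!done) print "module add debian11/compat" }
    ' "$script" > "$tmp" && cat "$tmp" > "$script"   # cat keeps the original file permissions
    rm -f "$tmp"
}
```

For example, `add_compat_module myjob.sh`, where myjob.sh is a placeholder for your job script.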

About half of the clusters are now available in the new OpenPBS environment. We are working hard to reinstall the rest, which we can only do once the jobs running on them have finished. Overview of machines with the Debian12 feature: https://metavo.metacentrum.cz/pbsmon2/props?property=os%3Ddebian12

You can test whether your job will run in the new OpenPBS environment in the qsub builder: https://metavo.metacentrum.cz/pbsmon2/qsub_pbspro

For up-to-date information on the migration, see the documentation at https://docs.metacentrum.cz/tutorials/debian-12/ (we will update the migration procedure here).

Dear users,

We would like to invite you to the e-INFRA CZ Conference 2024, which will take place on 29-30 April 2024 at the Occidental Hotel in Prague.

At the conference, we will present the e-INFRA CZ infrastructure, its services, international projects and research activities. We will introduce the latest news and outline MetaCentrum's plans. The second day of the conference will bring concrete advice and examples of how to use the infrastructure.

The conference will be held in English.

For more information, agenda and registration, visit the event page at https://www.e-infra.cz/konference-e-infra-cz

We look forward to seeing you,

Yours MetaCenter

Dear users,

we are forwarding an invitation to the Open Day for the launch of the OSCARS Open Call for Open Science Projects.


Best regards,


We are preparing the transition to the new PBS, OpenPBS. The existing PBSPro servers will be decommissioned in the future because they cannot communicate directly with the new OpenPBS servers and utilities. Along with the migration to the new PBS, we are upgrading the OS from Debian11 to Debian12.

For testing purposes we have prepared a new OpenPBS environment pbs-m1.metacentrum.cz with new frontend zenith running on Debian12 OS:

- new frontend zenith.cerit-sc.cz (aka zenith.metacentrum.cz) running Debian12 OS

- new OpenPBS server pbs-m1.metacentrum.cz

- home /storage/brno12-cerit/

The new environment will gradually be extended to other clusters.

Overview of machines running Debian12: https://metavo.metacentrum.cz/pbsmon2/props?property=os%3Ddebian12

List of available frontends including the current OS: https://docs.metacentrum.cz/computing/frontends/

The new PBS can also be accessed from other frontends, but the openpbs module (module add openpbs) must be activated.

We are continuously solving compatibility problems of some applications with Debian12 OS by recompiling new software modules. If you encounter a problem with your application, try adding the debian11/compat module to the beginning of the startup script (module add debian11/compat). If problems persist (missing libraries, etc.), let us know at meta(at)cesnet.cz.

For more information, see the documentation at https://docs.metacentrum.cz/tutorials/debian-12/ (we will specify the migration procedure here).

We would like to remind you of the opportunity to share with us your experience with computing services of the large research infrastructure e-INFRA CZ, which consists of e-infrastructures CESNET, CERIT-SC and IT4Innovations. Please complete the questionnaire by 8 March 2024. Your answers will help us to adjust our services to better suit you.

If you have already completed the questionnaire, thank you for doing so! We greatly appreciate it.

The questionnaire is available at https://survey.e-infra.cz/compute

Matlab

We have acquired a new academic license for 200 instances of Matlab 9.14 and later (including a wide range of toolboxes), covering the computing environments of MetaCenter, CERIT-SC and IT4Innovations.

The new license comes with stricter conditions compared to the previous version. Please be aware that it is exclusively valid for use from MetaCenter/IT4Innovations IP addresses. Consequently, it cannot be utilized for running Matlab on personal computers or within university lecture rooms.

More information: https://docs.metacentrum.cz/software/sw-list/matlab/

Mathematica

Starting this year, MetaCentrum no longer holds a grid license for general use of the Mathematica software (the supplier was unable to offer a suitable licensing model).

Currently, Mathematica 9 licenses are restricted to members of UK (Charles University) and JČU (University of South Bohemia) who have their own licenses for students and employees.

If you have your own (institutional) Mathematica software license, please contact us for more information at meta@cesnet.cz.

More information: https://docs.metacentrum.cz/software/sw-list/wolfram-math/

Chipster

MetaCenter has recently made its own instance of the Chipster tool available to users at https://chipster.metacentrum.cz/.

Chipster is an open-source tool for analyzing genomic data. Its main purpose is to enable researchers and bioinformatics experts to perform advanced analyses on genomic data, including sequencing data, microarrays, and RNA-seq:

More information: https://docs.metacentrum.cz/related/chipster/

Galaxy for MetaCenter users

Galaxy is an open web platform designed for FAIR data analysis. Originally focused on biomedical research, it now covers various scientific domains. For MetaCentrum users, we have prepared two Galaxy environments for general use:

a) usegalaxy.cz

General portal at https://usegalaxy.cz/ mirrors the functionality (especially the set of available tools) of global services (usegalaxy.org, usegalaxy.eu). Additionally, it offers significantly higher user quotas (both computational and storage) for registered MetaCentrum users. Key features:

More information: https://docs.metacentrum.cz/related/galaxy/

b) RepeatExplorer Galaxy

In addition to the general-purpose Galaxy, we offer our users a dedicated Galaxy instance with the RepeatExplorer tool. Registration for the service is required.

RepeatExplorer is a powerful data processing tool that is based on the Galaxy platform. Its main purpose is to characterize repetitive sequences in data obtained from sequencing. Key features:

More information: https://galaxy-elixir.cerit-sc.cz/

OnDemand

Open OnDemand https://ondemand.grid.cesnet.cz/ is a service that allows users to access computational resources through a web browser in graphical mode. The user can run common PBS jobs, access frontend terminals, copy files between repositories, or run multiple graphical applications directly in the browser.

Some of the features of Open OnDemand include:

More information: https://docs.metacentrum.cz/software/ondemand/

Kubernetes/Rancher

A number of graphical applications are also available in Kubernetes/Rancher https://rancher.cloud.e-infra.cz/dashboard/ under the management of CERIT-SC (Ansys, Remote Desktop, Matlab, RStudio, ...)

More information: https://docs.cerit.io/

JupyterNotebooks

Jupyter Notebooks is an "as a Service" environment based on Jupyter technology. It is accessible via a web browser and allows users to combine code (mainly in Python), Markdown text, mathematics, computation results and rich media content in one document.

MetaCenter users can use Jupyter Notebooks in three flavors:

a) CERIT-SC offers access to AlphaFold as a Service in a web browser (as a pre-built Jupyter Notebook).

More information: https://docs.cerit.io/docs/alphafold.html

b) in batch jobs in OnDemand https://ondemand.grid.cesnet.cz/pun/sys/myjobs/workflows/new

c) in batch jobs using RemoteDesktop and pre-made containers for Singularity

More information: https://docs.metacentrum.cz/software/sw-list/alphafold/

The archive storage du4.cesnet.cz, connected to MetaCenter as storage-du-cesnet.metacentrum.cz, is out of warranty and is experiencing a number of technical problems with the tape library mechanics. These problems do not compromise the stored data itself, but they complicate its availability. Colleagues at CESNET Data Storage are preparing to migrate the existing data to a new system (Object Storage).

We now need to reduce the traffic on this storage as much as possible:

If you need the data stored here for calculations, please arrange a priority migration with our colleagues at du-support@cesnet.cz

If, on the other hand, you have data stored here that you no longer plan to use or move (for example, old backups), please also contact colleagues at du-support@cesnet.cz.

Dear users,

we are forwarding an invitation to SVS FEM (Ansys) courses.


SVS FEM s.r.o., Trnkova 3104/117c, 628 00 Brno

+420 543 254 554 | http://www.svsfem.cz

Best regards,

Due to failures and age, we have recently decommissioned, or plan to decommission in the near future, the oldest CERIT-SC disk arrays:

We recently decommissioned the /storage/brno3-cerit/ disk array and moved the data from the /home directories to /storage/brno12-cerit/home/LOGIN/brno3/ (or directly to /home if it was empty on the new array).

The symlink /storage/brno3-cerit/home/LOGIN/..., which points to the same data on the new array, remains functional for now. From now on, please use the new path to the same data, /storage/brno12-cerit/home/LOGIN/...

All data from brno3 has already been physically moved to the new array! There is no need to copy anything.

In the near future we will start moving data from the /storage/brno1-cerit/ disk array to /storage/brno12-cerit/home/LOGIN/brno1/.

We will move the data at a time when it will not be used in jobs.

The symlink /storage/brno1-cerit/home/LOGIN/... will temporarily remain functional, pointing to the same data on the new array. It will be removed when the old array is decommissioned, after which the data will be available as /storage/brno12-cerit/home/LOGIN/brno1/.

ATTENTION: Please note that the /storage/brno1-cerit/ disk array also contains data from archives of old, long-deleted disk arrays. We do not have plans to transfer data from archives automatically. If you require data from the following archives, please contact us at meta@cesnet.cz, and we will copy the necessary data to /storage/brno12-cerit/:

The disk array /storage/brno12-cerit/ (storage-brno12-cerit.metacentrum.cz) will be the only one connected to MetaCenter from CERIT-SC.

You will find all your data on the /storage/brno12-cerit/home/LOGIN/... disk array, and the symlinks to the old storage will be removed by summer at the latest.
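Because the symlinks to the old storage will be removed, job scripts that still use /storage/brno1-cerit/ or /storage/brno3-cerit/ paths will stop working. A minimal sketch for finding such scripts follows; the function name `find_old_storage_refs` is hypothetical, and it is best pointed at the directory that holds your scripts, since scanning a large home directory can take a long time.

```shell
# Hypothetical helper: list files that still mention the old CERIT-SC arrays.
find_old_storage_refs() {
    local dir="${1:-$HOME}"
    # -r: recurse, -l: print only file names, -E: extended regex.
    # "brno1-cerit" does not match "brno12-cerit", so new paths are not reported.
    grep -rlE '/storage/brno(1|3)-cerit/' "$dir" 2>/dev/null
}
```

Each reported file then needs its paths updated by hand, since brno3 data may have been moved either to .../brno3/ or directly to /home.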

We apologize for any inconvenience and wish you a pleasant day.

Sincerely, MetaCenter.

Dear users,

we are forwarding an invitation to IT4Innovations courses.


Best regards,

Researchers from the Department of Cybernetics at FAV ZČU, who presented at the MetaCenter Grid Workshop in the spring and with whom we recently prepared a report on the use of our services, have won the AI Awards 2023. Congratulations!

Our services, in particular the Kubernetes cluster Kubus and its associated disk storage, are also behind the award-winning project of preserving historical heritage and cultural memory by providing access to the NKVD/KGB archive of historical documents.

MetaCentre manages these computing and data resources to solve very demanding tasks in the field of science and research. For more information, see the ZČU press release.

Our colleague Zdeněk Šustr is speaking today at the Copernicus forum and Inspirujme se 2023 conference at the Brno Observatory and Planetarium. He will present new services, data and plans for the Sentinel CollGS national node and the GREAT project. The conference is part of the Czech Space Week event and focuses on remote sensing and INSPIRE infrastructure for spatial data sharing.

The GREAT project is funded by the European Union, Digital Europe Programme (DIGITAL - ID: 101083927).

Dear users,

we are forwarding an invitation to IT4Innovations courses.


With best wishes for pleasant computing,

Dear users,

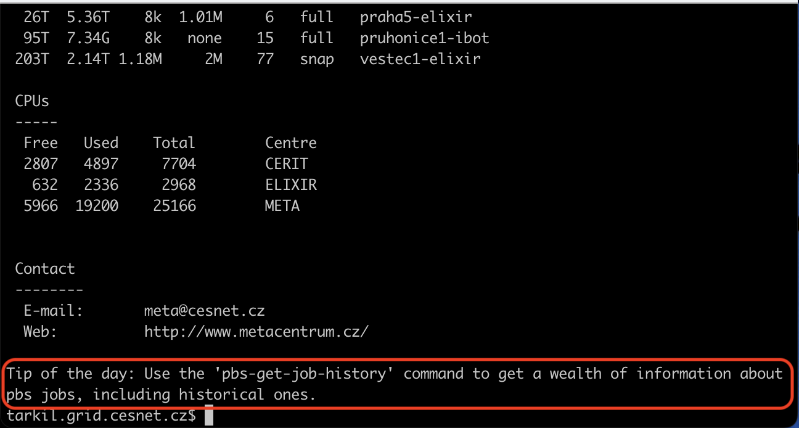

Based on the feedback we received from you in the user questionnaire at the turn of the year, we have compiled the most frequent questions into a Tip of the Day.

You will now see a random tip in the form of a short text at the end of the MOTD listing on the frontends when you log in.

You can disable viewing of tips on the selected frontend by using the "touch ~/.hushmotd" command.

With best wishes for a pleasant computing experience,

MetaCentrum

Dear users,

we are pleased to announce that we have acquired some very interesting new HW for MetaCenter.

For more information, please also see the press release e-INFRA CZ "Researchers in the Czech Republic get the most advanced AI system and two new clusters for demanding technical calculations"

Masaryk University (CERIT-SC) has become a pioneer in supporting artificial intelligence (AI) and high-performance computing technology with the installation of the latest and most advanced NVIDIA DGX H100 system. This is the first facility of its kind in the entire country (and Europe), bringing extreme computing power and innovative research capabilities.

Built on the latest NVIDIA Hopper GPU architecture, the DGX H100 contains eight advanced NVIDIA H100 Tensor Core GPUs, each with 80 GB of GPU memory. This enables parallel processing of huge data volumes and dramatically accelerates computing tasks.

NVIDIA DGX H100 capy.cerit-sc.cz system configuration:

The DGX H100 server comes with the pre-installed NVIDIA DGX software package, which includes a comprehensive set of deep learning tools, including pre-configured environments.

The machine is available on-demand in a dedicated queue at gpu_dgx@meta-pbs.metacentrum.cz.

To request access, contact meta@cesnet.cz. In your request, describe the reasons for allocating this resource (your need for it and your ability to use it effectively). Also briefly describe the expected results, the expected volume of resources and the period for which you need access.

In addition, MetaCenter users can start using two brand new computing clusters acquired by CESNET. The first one has been launched at the Institute of Molecular Genetics of the Academy of Sciences of the Czech Republic in Prague under the name TURIN and the second one at the Institute of Computer Science of Masaryk University in Brno under the name TYRA.

The Prague TURIN cluster has 52 nodes, each with 64 CPU cores and 512 GB of RAM. Its Brno counterpart TYRA consists of 44 nodes with otherwise identical technical specifications.

Both clusters are equipped with AMD processors along with AMD 3D V-Cache technology. These are the most powerful server processors designed for demanding calculations.

Cluster configurations turin.metacentrum.cz and tyra.metacentrum.cz

A complete list of currently available computing servers is available at https://metavo.metacentrum.cz/pbsmon2/hardware.

With best wishes for a pleasant computing experience,

MetaCentrum

Dear users,

Masaryk University (CERIT-SC) has become a pioneer in the field of artificial intelligence (AI) and powerful computing technology by installing the latest and most advanced NVIDIA DGX H100 system. This is the first facility of its kind in the entire country, delivering extreme computing power and innovative research capabilities.

Built on the latest NVIDIA Hopper GPU architecture, the DGX H100 features eight NVIDIA H100 Tensor Core GPUs, each with 80 GB of GPU memory, delivering a total of 32 petaFLOPS of FP8 performance. This enables parallel processing of huge data volumes and significantly accelerates computing tasks. Thanks to the high-performance memory subsystems of the graphics accelerators, it provides fast data access and optimizes performance when working with large data sets. Users can achieve unparalleled efficiency and responsiveness in their AI tasks.

The DGX H100 server comes with the NVIDIA DGX software package pre-installed, which includes a comprehensive set of deep learning tools and pre-configured environments.

The machine is available on-demand in a dedicated queue at gpu_dgx@meta-pbs.metacentrum.cz.

To request access, contact meta@cesnet.cz. In your request, describe the reasons for allocating this resource (your need for it and your ability to use it effectively). Also briefly describe the expected results, the expected volume of resources and the period for which access is needed.

A complete list of currently available computing servers is available at http://metavo.metacentrum.cz/pbsmon2/hardware.

With best wishes for a pleasant computing experience,

Dear users,

I'm glad to announce that MetaCentrum's computing capacity has been extended with new clusters:

1) CPU cluster turin.metacentrum.cz, 52 nodes, 3328 CPU cores, in each node:

2) CPU cluster tyra.metacentrum.cz, 44 nodes, 2816 CPU cores, in each node:

Both clusters can be accessed via the conventional job submission through PBS batch system (@pbs-meta server) in short default queues. Longer queues will be added after testing.

For complete list of available HW in MetaCentrum see http://metavo.metacentrum.cz/pbsmon2/hardware

MetaCentrum

Dear users,

We have prepared new MetaCenter documentation for you, which is available at https://docs.metacentrum.cz/ .

We have structured the content according to the topics you are interested in, which you can find in the top bar. After clicking on the selected topic, the help menu on the left will appear with further navigation. On the right is the table of contents with the topics on the page.

We have included the feedback you sent us in the questionnaire into the documentation (thank you). For example, we cleaned up a lot of outdated information that remained traceable in the wiki and tried to make the tutorial examples clearer.

So that you can still trace back information, the original documentation will not be deleted immediately but will remain temporarily accessible. However, it has not been updated since the end of March 2023!

Why did we choose a different documentation format and leave the wiki?

As you know, we are in the process of integrating our services into a single e-INFRA CZ* platform. Part of this integration is the unification of the format of all user documentation. In the future, we will integrate our new documentation into the common documentation of all services provided as part of e-INFRA CZ activities https://docs.e-infra.cz/.

-----

* e-INFRA CZ is an infrastructure for science and research that connects and coordinates the activities of three Czech e-infrastructures: the CESNET, CERIT-SC and IT4Innovations. More information can be found on the e-INFRA CZ homepage https://www.e-infra.cz/.

-----

The new documentation is still undergoing development and changes. If you encounter any problems or uncertainties, or if you miss something, please let us know at meta@cesnet.cz. We are already thinking about how to make the section of the documentation dedicated to software installations even better for you.

Sincerely,

MetaCenter team

Dear users,

we would like to forward information about the grant competition:


Dear users,

let us resend you the following invitation:

--

Dear Madam / Sir,

The Czech National Competence Center in HPC is inviting you to a course Introduction to MPI, which will be held hybrid (online and onsite) on 30–31 May 2023.

Message Passing Interface (MPI) is a dominant programming model on clusters and distributed memory architectures. This course is focused on its basic concepts such as exchanging data by point-to-point and collective operations. Attendees will be able to immediately test and understand these constructs in hands-on sessions. After the course, attendees should be able to understand MPI applications and write their own code.

Introduction to MPI

Date: 30–31 May 2023, 9 am to 4 pm

Registration deadline: 23 May 2023

Venue: online via Zoom, onsite at IT4Innovations, Studentská 6231/1B, 708 00 Ostrava–Poruba, Czech Republic

Tutors: Ondřej Meca, Kristian Kadlubiak

Language: English

Web page: https://events.it4i.cz/event/165/

Please do not hesitate to contact us should you have any questions. Please write to us at training@it4i.cz.

We are looking forward to meeting you online and onsite.

Best regards,

Training Team IT4Innovations

training@it4i.cz

Dear users,

We would like to invite you to the traditional MetaCenter Seminar for all users, which will take place in Prague on 12th and 13th April 2023.

Together with EOSC CZ, we have prepared a rich program that may be of interest to you.

The first day of the event will be devoted to EOSC CZ activities, especially the preparation of a national repository platform and storage/archiving of research data in the Czech Republic.

The second day will be devoted to the Grid Computing 2023 Workshop, which will present the news and new services offered by MetaCentre.

These will include Singularity containers, NVIDIA framework for AI, Galaxy, graphical environments in OnDemand and Kubernetes, Jupyter Notebooks, Matlab (invited talk) and many more. In the afternoon, there will be an optional Hands-on workshop with limited capacity, where you can learn a lot of interesting things and try out the topics you are interested in under the guidance of our experts.

As we want the Workshop to meet your needs, we would be very happy if you could let us know which topics you are interested in and what you would like to try. We will try to include them in the program. Please send your suggestions to meta@cesnet.cz.

For more information about the event, please visit the seminar page: https://metavo.metacentrum.cz/cs/seminars/index.html

We look forward to your participation! The seminar will be held in Czech language. We will inform you about the opening of registration.

Yours MetaCentrum

Dear users,

We would like to inform you that starting from Thursday, March 9th, 2023, we are changing the method of calculating fairshare. We are adding a new coefficient called "spec", which takes into account the speed of the computing node on which your job is running.

Until now, "usage fairshare" was calculated as usage = used_walltime*PE , where "PE" represents processor equivalents expressing how many resources (ncpus, mem, scratch, gpu...) the user allocated on the machine.

From now on it will be calculated as usage = spec*used_walltime*PE, where "spec" denotes the standard specification of the main node (spec per CPU) on which the job is running. This coefficient takes values from 3 to 10.
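To make the effect of the new coefficient concrete, here is a minimal Python sketch that evaluates both formulas for a single job. Measuring used_walltime in hours and the example values (10 hours, PE = 4, spec = 5) are assumptions for illustration only; the real accounting is done by the scheduler.

```python
def old_usage(used_walltime_h: float, pe: float) -> float:
    """Fairshare usage before 9 March 2023: walltime times processor equivalents."""
    return used_walltime_h * pe


def new_usage(spec: float, used_walltime_h: float, pe: float) -> float:
    """Fairshare usage from 9 March 2023: additionally weighted by node speed (spec per CPU)."""
    if not 3 <= spec <= 10:
        raise ValueError("spec is expected to lie between 3 and 10")
    return spec * used_walltime_h * pe


# Hypothetical job: 10 hours of walltime, PE = 4, running on a node with spec = 5.
print(old_usage(10, 4))
print(new_usage(5, 10, 4))
```

The same job running on a faster node (higher spec) is therefore charged proportionally more fairshare usage.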

We hope that this change will allow you to use our computing resources even more efficiently. If you have any questions, please do not hesitate to contact us.

Dear users,

We have prepared a new version of the Open OnDemand graphical environment.

Open OnDemand https://ondemand.metacentrum.cz is a service that enables users to access computational resources via web browser in graphical mode.

Users may start common PBS jobs, access frontend terminals, copy files between our storages or run several graphical applications in the browser. Among the most used applications available are Matlab, ANSYS, MetaCentrum Remote Desktop and VMD (see full list of GUI applications available via OnDemand). The graphical sessions are persistent: you can access them from different computers at different times or even simultaneously.

The login and password for the Open OnDemand V2 interface are your e-INFRA CZ / Metacentrum login and your Metacentrum password.

More information can be found in the documentation on the wiki https://wiki.metacentrum.cz/wiki/OnDemand

Dear users,

let us resend you the following invitation:

--

Dear Madam / Sir,

The Czech National Competence Center in HPC is inviting you to a course High Performance Data Analysis with R, which will be held hybrid (online and onsite) on 26–27 April 2023.

This course is focused on data analysis and modeling in R statistical programming language. The first day of the course will introduce how to approach a new dataset to understand the data and its features better. Modeling based on the modern set of packages jointly called TidyModels will be shown afterward. This set of packages strives to make the modeling in R as simple and as reproducible as possible.

The second day is focused on increasing computation efficiency by introducing Rcpp for seamless integration of C++ code into R code. A simple example of CUDA usage with Rcpp will be shown. In the afternoon, the section on parallelization of the code with future and/or MPI will be presented.

High Performance Data Analysis with R

Date: 26–27 April 2023, 9 am to 5 pm

Registration deadline: 20 April 2023

Venue: online via Zoom, onsite at IT4Innovations, Studentská 6231/1B, 708 00 Ostrava – Poruba, Czech Republic

Tutor: Tomáš Martinovič

Language: English

Web page: https://events.it4i.cz/event/163/

Please do not hesitate to contact us should you have any questions. Please write to us at training@it4i.cz.

We are looking forward to meeting you online and onsite.

Best regards,

Training Team NCC Czech Republic

training@it4i.cz

Dear users,

We would like to hear what you think about the services we are providing.

Please find approx. 15 minutes to complete the feedback form to provide us with the valuable information necessary to advance our services.

We understand that the time you spend on this questionnaire is valuable, and therefore everyone who completes the form and fills in their e-INFRA CZ login will receive a reward from us in the form of 0.5 impacted publication in the Grid service.

Feedback form (please choose any language option):

Thank you for your feedback. We wish you many successes and that everything is going well in 2023.

Your MetaCentrum

Dear users,

To optimize the use of the NUMA architecture of the ursa server, we have introduced the uv18.cerit-pbs.cerit-sc.cz queue, which allows processors to be allocated only in blocks of 18. This ensures that a whole NUMA node is always used and avoids the significant slowdown caused by unnecessarily spreading a job across multiple NUMA nodes.

The queue therefore accepts jobs in multiples of 18 CPU cores and has a high priority.
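If you plan to use this queue, your core count has to be a whole multiple of 18. The minimal Python sketch below rounds a desired core count up to the nearest acceptable value and reports how many 18-core blocks it occupies. The helper names are hypothetical (not a MetaCentrum tool), and treating one block of 18 cores as one NUMA node is an assumption based on the description above.

```python
NUMA_BLOCK = 18  # cores per allocation block in the uv18 queue (assumed = one NUMA node)


def round_up_to_numa_block(requested_cores: int, block: int = NUMA_BLOCK) -> int:
    """Return the smallest multiple of `block` that is >= requested_cores."""
    if requested_cores < 1:
        raise ValueError("at least one core must be requested")
    # Integer ceiling division, avoids floating-point rounding issues.
    return ((requested_cores + block - 1) // block) * block


def is_valid_uv18_request(ncpus: int, block: int = NUMA_BLOCK) -> bool:
    """True if ncpus is a positive whole multiple of the block size."""
    return ncpus >= block and ncpus % block == 0


for wanted in (10, 18, 40):
    ncpus = round_up_to_numa_block(wanted)
    print(wanted, "->", ncpus, "cores in", ncpus // NUMA_BLOCK, "block(s)")
```

Rounding up rather than down keeps the job from running with fewer cores than it needs, at the cost of occupying a whole extra block.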

Best regards,

Your Metacentrum

Dear users,

when submitting a computational job, it is now possible to define the minimum CPU speed of the computing node, i.e. to make sure that the job will run on a node whose CPU is at least as fast as specified. For this purpose, a new PBS parameter spec is used. Its numerical value is obtained by the methodology of https://www.spec.org/. To learn more about the usage of the spec parameter, visit our wiki at https://wiki.metacentrum.cz/wiki/About_scheduling_system#CPU_speed.

Setting a CPU speed requirement can make the job run faster, but on the other hand it limits the number of machines available to the job, which can result in longer queuing times. Please bear this in mind when using the spec parameter.
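The trade-off can be illustrated by treating the spec requirement as a filter over the set of candidate machines. In the minimal Python sketch below, the node names and spec values are invented for illustration and do not describe real MetaCentrum machines; raising min_spec leaves fewer nodes on which the job may start.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    spec: float  # per-CPU SPEC rating of the node (hypothetical values below)


def eligible_nodes(nodes: list[Node], min_spec: float) -> list[Node]:
    """Nodes that satisfy a minimum CPU speed requirement (spec >= min_spec)."""
    return [n for n in nodes if n.spec >= min_spec]


# Invented example nodes, for illustration only.
nodes = [Node("node-a", 4.5), Node("node-b", 6.0), Node("node-c", 8.2), Node("node-d", 9.1)]

for min_spec in (0, 6.0, 9.0):
    names = [n.name for n in eligible_nodes(nodes, min_spec)]
    print(f"spec >= {min_spec}: {len(names)} eligible -> {names}")
```

Fewer eligible nodes means fewer chances for a free slot, which is where the longer queuing times come from.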

Best regards,

your Metacentrum

Dear Madam/Sir,

As part of the MetaCenter infrastructure security audit, we identified several weak user passwords. To ensure sufficient protection of the MetaCenter environment, the affected users will need to change their password on the MetaCenter portal (https://metavo.metacentrum.cz/cs/myaccount/heslo.html).

The concerned users will be contacted directly.

Should you have any questions, please contact support@metacentrum.cz.

Yours,

MetaCentrum

We would like to inform users about several new features in the MetaCentrum & CERIT-SC infrastructures:

Users can now access GUI applications simply through a web browser. For detailed information see https://wiki.metacentrum.cz/wiki/Remote_desktop#Quick_start_I_-_Run_GUI_desktop_in_a_web_browser.

The access through VNC client (an older and more complicated way to get GUI) remains unchanged - see https://wiki.metacentrum.cz/wiki/Remote_desktop#Quick_start_II_-_Run_GUI_desktop_in_a_VNC_session and following tutorials.

As a new feature users can now fetch data from finished jobs, including those that finished more than 24 hours ago. For this, use command

pbs-get-job-history <job_id>

If the job is found in the archive, the command will create a new subdirectory called job_ID (e.g. 11808203.meta-pbs.metacentrum.cz) in the current directory, containing several files, namely:

job_ID.SC - a copy of the batch script as passed to qsub

job_ID.OU - standard output (STDOUT) of the job

job_ID.ER - standard error output (STDERR) of the job

For detailed information see https://wiki.metacentrum.cz/wiki/PBS_get_job_history
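If you want to process the retrieved files programmatically, the minimal Python sketch below collects them into a dictionary keyed by file suffix. It assumes the layout described above (a subdirectory named after the job ID containing the files <job_ID>.SC, <job_ID>.OU and <job_ID>.ER); the function name read_job_history is hypothetical and not part of the MetaCentrum tools.

```python
from pathlib import Path

# File suffixes created by pbs-get-job-history, as listed above.
SUFFIXES = ("SC", "OU", "ER")


def read_job_history(job_id: str, base_dir: Path = Path(".")) -> dict[str, str]:
    """Return {suffix: file contents} for the files retrieved for job_id.

    Looks for <base_dir>/<job_id>/<job_id>.<suffix>; missing files are skipped.
    """
    job_dir = base_dir / job_id
    contents: dict[str, str] = {}
    for suffix in SUFFIXES:
        path = job_dir / f"{job_id}.{suffix}"
        if path.is_file():
            # errors="replace" keeps undecodable bytes in job output from raising.
            contents[suffix] = path.read_text(errors="replace")
    return contents
```

For example, after running pbs-get-job-history 11808203.meta-pbs.metacentrum.cz in the current directory, read_job_history("11808203.meta-pbs.metacentrum.cz")["SC"] would return the batch script.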

As a new feature users can now specify a minimum amount of memory the GPU card needs to have. For this there is a new PBS parameter gpu_mem. For example, the command

qsub -q gpu -l select=1:ncpus=2:ngpus=1:mem=10gb:scratch_local=10gb:gpu_mem=10gb -l walltime=24:0:0

makes sure that the GPU card on computational node will have at least 10 GB of memory.

For more information see https://wiki.metacentrum.cz/wiki/GPU_clusters.

We would also like to note that it is better to select a GPU machine by specifying the gpu_mem and cuda_cap parameters than by specifying a particular cluster. The former way includes a wider set of machines and therefore shortens the queuing time of jobs.
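To follow this recommendation in scripts that generate job submissions, you can describe the GPU by its properties rather than by a cluster name. The minimal Python sketch below assembles the select statement; the helper gpu_select is hypothetical and only builds the command line, it does not submit anything. The accepted value format for cuda_cap is an assumption here, so check the GPU_clusters wiki page before using it. With the values from the example above, it reproduces that qsub command exactly.

```python
from typing import Optional


def gpu_select(ncpus: int, ngpus: int, mem: str, scratch_local: str,
               gpu_mem: Optional[str] = None, cuda_cap: Optional[str] = None) -> str:
    """Build a PBS select statement that describes the GPU by its properties
    (gpu_mem, cuda_cap) instead of naming a particular cluster."""
    chunk = f"select=1:ncpus={ncpus}:ngpus={ngpus}:mem={mem}:scratch_local={scratch_local}"
    if gpu_mem is not None:
        chunk += f":gpu_mem={gpu_mem}"
    if cuda_cap is not None:
        # Value format for cuda_cap is an assumption; see the GPU_clusters wiki page.
        chunk += f":cuda_cap={cuda_cap}"
    return chunk


# Rebuild the example command above; this only prints it, nothing is submitted.
args = ["qsub", "-q", "gpu",
        "-l", gpu_select(2, 1, "10gb", "10gb", gpu_mem="10gb"),
        "-l", "walltime=24:0:0"]
print(" ".join(args))
```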

Dear Madam/Sir,

We resend you the invitation for ESFRI Open Session

--

Dear All,

I am pleased to invite you to the 3rd ESFRI Open Session, with the leading theme Research Infrastructures and Big Data. The event will take place on June 30th 2022, from 13:00 until 14:30 CEST and will be fully virtual. The event will feature a short presentation from the Chair on recent ESFRI activities, followed by presentations from 6 Research infrastructures on the theme and there will also be an opportunity for discussion. The detailed agenda of the 3rd Open Session will soon be available via the event webpage.

ESFRI holds Open Sessions at its plenary meetings twice a year, to communicate to a wider audience about its activities. They are intended to serve both the ESFRI Delegates and representatives of the Research Infrastructures community, and facilitate both-ways exchange. ESFRI has launched the Open Session initiative as a part of the goals set within the ESFRI White Paper - Making Science Happen.

I would like to inform you that the Open Session will be recorded and will be at your disposal at our ESFRI YouTube channel. The recordings from the previous Open Sessions themed around the ESFRI RIs response to the COVID-19 pandemic, and the European Green Deal, are available here.

Please forward this invitation to your colleagues in the EU Research & Innovation ecosystem that you deem would benefit from the event.

Registration is mandatory for participation, and should be done via the following link:

https://us06web.zoom.us/webinar/register/WN_0-sM43ktT3mPuCzXi3KNdQ

Your attendance at the Open Session will be highly appreciated.

Sincerely,

Jana Kolar,

ESFRI Chair

Dear users,

We would like to invite you to attend the Grid Computing Seminar - MetaCentre 2022, which will take place on 10 May 2022 in Prague at the Diplomat Hotel.

The seminar is part of the e-Infrastructure Conference e-INFRA CZ 2022 https://www.e-infra.cz/konference-e-infra-cz and will be held in the Czech language.

We would like to introduce you to the e-INFRA CZ infrastructure, its services, international projects and research activities. We will introduce you to the latest news and outline our plans.

In the afternoon programme we will offer two parallel sessions. One will focus on network development, security and multimedia and the other on data processing and storage - MetaCentre Grid Computing Seminar 2022.

In the evening, interested parties can then attend a bonus session, Grid Service MetaCentrum - Best Practices, followed by a free discussion on topics that interest you and keep you awake.

For more information, agenda and registration, visit the event page at https://metavo.metacentrum.cz/cs/seminars/seminar2022/index.html

We look forward to seeing you,

Yours MetaCenter

Dear users,

I'm glad to announce that MetaCentrum's computing capacity has been extended with new clusters:

galdor.metacentrum.cz (owner CESNET), 20 nodes, 1280 CPU cores and 80 NVIDIA A40 GPUs, in each node:

The cluster can be accessed via the conventional job submission through PBS batch system (@pbs-meta server) in gpu priority and short default queues.

On GPU clusters, it is possible to use Docker images from NVIDIA GPU Cloud (NGC), the most used environment for the development of machine learning and deep learning applications, HPC applications or visualization accelerated by NVIDIA GPU cards. Deploying these applications is then a matter of copying the link to the appropriate Docker image and running it as a container in Singularity. More information can be found at https://wiki.metacentrum.cz/wiki/NVidia_deep_learning_frameworks

halmir.metacentrum.cz (owner CESNET), 31 nodes, 1984 CPU cores, in each node:

The cluster can be accessed via the conventional job submission through PBS batch system (@pbs-meta server) in short default queues. Longer queues will be added after testing.

We are continuously solving compatibility problems of some applications with the Debian11 OS by recompiling new SW modules. If you encounter a problem with your application, try adding the debian10-compat module at the beginning of the startup script. If the problems persist, let us know at meta (at) cesnet.cz.

For complete list of available HW in MetaCentrum see http://metavo.metacentrum.cz/pbsmon2/hardware

MetaCentrum

Dear users,

we invite you to the webinar Introduction of Kubernetes as another computing platform available to MetaCentrum users

Dear Madam/Sir,

Metacentrum is proceeding to adopt new algorithms for authenticating users and verifying their passwords.

The new algorithms provide increased security and enable support of the latest devices and operating systems. In order to finish the transition, some users will be asked to visit the Metacentrum portal and renew their password in the application for password change (https://metavo.metacentrum.cz/en/myaccount/heslo.html).

The concerned users will be contacted directly.

Please note that we never ask our users to send their passwords by e-mail. All information related to the management of users' passwords is available from the Metacentrum web portal.

Should you have any questions, please contact support@metacentrum.cz.

Yours,

MetaCentrum

Dear Madam/Sir,

We resend you the invitation to the EGI webinar openRDM

--

Dear all

I'm pleased to announce the first webinar of the new year, which is related to the current hot topic of Data Spaces. Register now to reserve your place!

Title: openRDM

Date and Time: Wednesday, 12 January 2022 | 14:00-15:00 CET

Description: The talk will introduce OpenBIS, an Open Biology Information System, designed to facilitate robust data management for a wide variety of experiment types and research subjects. It allows tracking, annotating, and sharing of data throughout distributed research projects in different quantitative sciences.

Agenda: https://indico.egi.eu/event/5753/

Registration: us02web.zoom.us/webinar/register/WN_6xn2eqnjTI60-AtB6FKEEg

Speaker: Priyasma Bhoumik, Data Expert, ETH Zurich. Priyasma holds a PhD in Computational Sciences, from University of South Carolina, USA. She has worked as a Gates Fellow in Harvard Medical School to explore computational approaches to understanding the immune selection mechanism of HIV, for better vaccine strategy. She moved to Switzerland to join Novartis and has worked in the pharma industry in the field of data science before joining ETHZ.

If you missed any previous webinars, you can find recordings at our website: https://www.egi.eu/webinars/

Please let us know if there are any topics you are interested in, and we can arrange webinars according to your requests.

Looking forward to seeing you on Wednesday!

Yin

----

Dr Yin Chen

Community Support Officer

EGI Foundation (Amsterdam, The Netherlands)

W: www.egi.eu | E: yin.chen@egi.eu | M: +31 (0)6 3037 3096 | Skype: yin.chen.egi | Twitter: @yinchen16

EGI: Advanced Computing for Research

The EGI Foundation is ISO 9001:2015 and ISO/IEC 20000-1:2011 certified

From now on, it is possible to choose a new type of scratch, the SHM scratch. This scratch directory is intended for jobs that need fast read/write operations. SHM scratch is held only in RAM; therefore, all data are non-persistent and disappear when the job ends or fails. You can read more about SHM scratch and its usage at https://wiki.metacentrum.cz/wiki/Scratch_storage
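Because SHM scratch lives only in RAM, results must be copied to persistent storage before the job ends. The minimal Python sketch below shows one way to guarantee this with a try/finally block, so that partial results are saved even when the computation raises an error. The helper run_in_scratch and its arguments are hypothetical; in a real job the scratch path would come from the job environment (the SCRATCHDIR variable used by MetaCentrum jobs is an assumption here for SHM scratch), and the results directory would be on one of the /storage arrays. Note that finally does not run if the batch system kills the job, for example when walltime runs out.

```python
import shutil
from pathlib import Path
from typing import Callable, Iterable


def run_in_scratch(work: Callable[[Path], None], scratch: Path,
                   results_dir: Path, outputs: Iterable[str]) -> None:
    """Run work(scratch), then copy the named output files to persistent storage.

    The copy happens in a finally block, so partial results are saved even if
    work() raises. Files that were never created are skipped.
    """
    results_dir.mkdir(parents=True, exist_ok=True)
    try:
        work(scratch)
    finally:
        for name in outputs:
            src = scratch / name
            if src.is_file():
                # copy2 also preserves file timestamps.
                shutil.copy2(src, results_dir / name)
```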

With best regards,

MetaCentrum

We announce that the storages /storage/brno8 and /storage/ostrava1 will be shut down and decommissioned by 27 September 2021. Data stored in user homes will be moved to the /storage/brno2/home/USERNAME/brno8 directory. The data transfer will be done by us and requires no action on the users' side. We nevertheless ask users to remove all data they do not want to keep and thus help us optimize the data transfer process.

Best regards,

MetaCentrum

Users are allowed to prolong their jobs in a limited number of cases.

To do this, use the command qextend <full jobID> <additional_walltime>

For example:

(BUSTER)melounova@skirit:~$ qextend 8152779.meta-pbs.metacentrum.cz 01:00:00
The walltime of the job 8152779.meta-pbs.metacentrum.cz has been extended.
Additional walltime: 01:00:00
New walltime: 02:00:00

To prevent abuse of the tool, there is a 30-day quota on how many times a single user can apply the qextend command AND on the total added time. Currently, within the last 30 days, you can

Job prolongations older than 30 days are "forgotten" and no longer occupy your quota.
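The rolling 30-day quota can be understood with a small model. The minimal Python sketch below checks a new extension request against both limits (number of extensions and total added time) and ignores extensions older than 30 days. The actual limits are not stated here, so max_count and max_total are hypothetical parameters, and the function illustrates the rule; it is not the logic qextend actually uses.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(days=30)


def can_extend(history: list[tuple[datetime, timedelta]], now: datetime,
               requested: timedelta, max_count: int, max_total: timedelta) -> bool:
    """Check a rolling 30-day quota on both the number of extensions and the added time.

    history holds (time of extension, walltime added); entries older than 30 days
    are "forgotten". max_count and max_total are placeholders for the real limits.
    """
    recent = [added for when, added in history if now - when <= WINDOW]
    if len(recent) + 1 > max_count:
        return False
    return sum(recent, timedelta()) + requested <= max_total


now = datetime(2021, 9, 1)
history = [
    (datetime(2021, 7, 1), timedelta(hours=10)),  # more than 30 days ago: ignored
    (datetime(2021, 8, 20), timedelta(hours=5)),  # within the window: counts
]
print(can_extend(history, now, timedelta(hours=10), max_count=2, max_total=timedelta(hours=24)))
print(can_extend(history, now, timedelta(hours=20), max_count=2, max_total=timedelta(hours=24)))
```

With these hypothetical limits, the first request fits (5 h + 10 h is within 24 h) while the second does not (5 h + 20 h exceeds it).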

More information can be found at https://wiki.metacentrum.cz/wiki/Prolong_walltime

Best regards,

MetaCentrum & CERIT-SC

Hello,

we announce that on August 15, 2021, the hador cluster providing Hadoop will be decommissioned. It is replaced by a virtualized cloud environment, together with a suggested procedure for creating a single-machine or multi-machine cluster.

For more information see https://wiki.metacentrum.cz/wiki/Hadoop_documentation

Best regards,

MetaCentrum

Due to the growing amount of data in our arrays, some disk operations are already disproportionately long. Problems are mainly caused by mass manipulations with data (copying of entire user directories, searching, backup, etc.). Complications are mainly caused by a large number of files.

We would like to ask you to check the number of files in your home directories and reduce it if possible (e.g. by packing them with zip, rar, ...). The current quota status can be checked as follows:

The quota will be set to 1-2 million files per user. We plan to introduce quotas gradually in the coming months. We have already started with the new storages.
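To get a rough idea of how many files a directory contains before the quota applies, you can use the minimal Python sketch below. It is an illustration, not an official tool: it counts every non-directory entry (regular files, symlinks, ...), which may differ from how the storage quota counts files, and the 1 million threshold simply takes the lower end of the announced range.

```python
import os

# Lower end of the announced 1-2 million files per user; the exact limit may differ.
FILE_QUOTA = 1_000_000


def count_files(root: str) -> int:
    """Count non-directory entries (regular files, symlinks, ...) below root.

    Symbolic links to directories are not followed.
    """
    total = 0
    for _dirpath, _dirnames, filenames in os.walk(root, followlinks=False):
        total += len(filenames)
    return total


def quota_share(root: str, quota: int = FILE_QUOTA) -> float:
    """Fraction of the file quota used by the tree under root."""
    return count_files(root) / quota
```

For example, count_files(os.path.expanduser("~")) walks your whole home directory; on a very large tree this walk itself takes a while, which is exactly the kind of load described above.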

If you have enough space on your storage directories, you can keep the packed data there. However, we encourage users to archive data that are of permanent value, large and not accessed frequently. If you really need to keep large numbers of files in your home directory, contact us at the user support e-mail meta@cesnet.cz

To reduce the number of files, please use access directly via /storage frontends, as described on our wiki in the section Working with data: https://wiki.metacentrum.cz/wiki/Working_with_data

Information about data backup and snapshotting is provided on the above-mentioned wiki page Working with data https://wiki.metacentrum.cz/wiki/Working_with_data, including recommendations on how to handle different types of data.

Information on the backup mode of individual disk arrays can be found

To increase the security of our users, we have decided to remove the possibility for other users (ACL group and other) to write to users' root home directories, which contain sensitive files such as .k5login, .profile, etc. (to prevent these files from being tampered with).

Please note that from 1 July we will start automatically checking the permissions of users' root home directories; writing by other users (anyone except the owner) will not be allowed. The ability to write to other subdirectories, typically for data sharing within a group, remains.
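You can check your own home directory in advance with the minimal Python sketch below. It inspects only the classic POSIX group/other write bits returned by stat; ACL entries, which the announcement also mentions, are not visible this way and have to be checked separately. The function name is hypothetical. If group or other write permission is reported, chmod go-w ~ removes it.

```python
import stat
from pathlib import Path


def writable_by_others(path: Path) -> list[str]:
    """Report which permission classes other than the owner may write to path.

    Only the POSIX mode bits are inspected; ACL entries are not covered.
    """
    mode = path.stat().st_mode
    problems = []
    if mode & stat.S_IWGRP:
        problems.append("group")
    if mode & stat.S_IWOTH:
        problems.append("other")
    return problems
```

For example, writable_by_others(Path.home()) returns an empty list when only the owner can write to the home directory.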

More information can be found on our wiki pages in the section Data sharing in the group: https://wiki.metacentrum.cz/wiki/Sharing_data_in_group

MetaCentrum

MetaCentrum introduces two new measures as part of raising security standards:

1) User access location monitoring. As part of our IT security precautions, we have introduced a new mechanism to prevent the abuse of stolen login data. From now on, the user's login location will be compared to previous point(s) of access. If a new location is detected, the user will receive an e-mail informing them of this and asking them to report to Metacentrum if they did not perform the login. The goal is to make it possible to detect unauthorized use of user login data.

In case they suspect unauthorized use of their login data, we ask users to proceed according to instructions given in the e-mail.

2) Change in password encryption handling. Due to recent changes in the Metacentrum security infrastructure, a new encryption method for users' passwords has been adopted. To complete the process, users affected by the change need to renew their passwords. The password itself does not need to be changed, although we urge users to choose a reasonably strong one.

In the coming weeks we will send an e-mail to the affected users asking them to renew their password. The password can also be changed at https://metavo.metacentrum.cz/en/myaccount/heslo.html.

Best regards,

MetaCentrum & CERIT-SC

Dear users,

On April 21, 2021, the tenth MetaCenter Grid Computing Workshop 2021 was held online as part of the three-day CESNET e-Infrastructure conference. Presentations from the entire conference are published on the conference page http://www.cesnet.cz/konferenceCESNET.

Presentations and video recordings from the Grid Computing Seminar, including our hands-on part, are available on the MetaCentrum website: https://metavo.metacentrum.cz/cs/seminars/seminar2021/index.html

We look forward to seeing you in near future again!

MetaCentrum & CERIT-SC

Dear MetaCentrum user,

CESNET e-infrastructure conference starts today!

Our Grid Computing Seminar 2021 will take place tomorrow, 21 April!

The conference runs from Tuesday 20 April to Thursday 22 April. The morning sessions start at 9 AM and the afternoon sessions at 1 PM.

Join the conference via Zoom or YouTube:

20.4.

21.4.

22.4.

YouTube link can be found in the program at http://www.cesnet.cz/konferenceCESNET.

Program of our MetaCenter Grid Computing Workshop: https://metavo.metacentrum.cz/cs/seminars/seminar2021/index.html. Presentations from the seminar will be published here after the event.

We look forward to seeing you!

MetaCentrum & CERIT-SC

Dear MetaCentrum user,

we would like to invite you to the Grid computing workshop 2021

AGENDA:

In the first part of our seminar, there will be lectures on news in MetaCentrum, CERIT-SC and IT4Innovations. In addition, we will present our national activities in the European Open Science Cloud and our experience from cooperation with the ESA user community, specifically on the processing and storage of data from Sentinel satellites.

In the afternoon part of the Grid Computing Seminar, there will be a practically focused Hands-on seminar, which consists of 6 separate tutorials on the topic of general advice, graphical environments, containers, AI support, JupyterNotebooks, MetaCloud user GUI, ...

The seminar is part of the three-day CESNET 2021 e-infrastructure Conference https://www.cesnet.cz/akce/konferencecesnet/, which takes place on 20-22 April 2021.

REGISTRATION:

Registration is free. Before the event, you will receive the link to join the conference. The conference is in Czech.

Program and registration: https://metavo.metacentrum.cz/cs/seminars/seminar2021/index.html

With best regards

MetaCentrum & CERIT-SC.

Dear users,

If your work is related to computational analysis, please fill in the Czech Galaxy Community Questionnaire below. It is very short and all questions are optional:

We would like to map the interests of Czech scientific communities, some of which are already using Galaxy, e.g. the RepeatExplorer (https://repeatexplorer-elixir.cerit-sc.cz/) or our own MetaCentrum (https://galaxy.metacentrum.cz/) instance. We want to identify interests with high prevalence and focus our training and outreach efforts towards them.

Together with the community questionnaire we are also launching a Galaxy-Czech mailing list at

https://lists.galaxyproject.org/lists/galaxy-czech.lists.galaxyproject.org/

This low volume open list will be steered towards organizing and publicizing workshops across all Galaxies, nurturing community discussion, and connecting with other national or topical Galaxy communities. Please subscribe if you are interested in what is happening in the Galaxy community.

Best regards,

yours MetaCentrum

Dear users,

I'm glad to announce that MetaCentrum's computing capacity has been extended with new clusters (1328 CPU cores in total):

1) GPU cluster cha.natur.cuni.cz (location Praha, owner CUNI UK), 1 node, 32 CPU cores:

2) cluster mor.natur.cuni.cz (location Praha, owner UK), 4 nodes, 80 CPU cores, in each node:

3) cluster pcr.natur.cuni.cz (location Praha, owner UK), 16 nodes, 1024 CPU cores, in each node:

4) GPU cluster fau.natur.cuni.cz (location Praha, owner UK), 3 nodes, 192 CPU cores, in each node:

The clusters can be accessed via the conventional job submission through PBS batch system (@pbs-meta server) in default short queues, queue "gpu" and owners' priority queue "cucam".

For complete list of available HW in MetaCentrum see http://metavo.metacentrum.cz/pbsmon2/hardware

MetaCentrum

Dear users,

I'm glad to announce that MetaCentrum's computing capacity has been extended with new clusters:

zia.cerit-sc.cz (location Brno, owner CERIT-SC), 5 nodes, 640 CPU cores, GPU card NVIDIA A100, in each node:

The cluster can be accessed via the conventional job submission through the PBS batch system (@pbs-cerit server) in the gpu priority and short default queues.

The cluster is equipped with currently the most powerful graphics accelerators NVIDIA A100 Tensor Core GPU (https://www.nvidia.com/en-us/data-center/a100/). It delivers unprecedented acceleration at every scale to power the world’s highest-performing elastic data centers for AI, data analytics, and HPC.

The main advantages of the NVIDIA A100 include specialized Tensor Cores for machine learning applications and large memory (40 GB per accelerator). The Tensor Cores support calculations at different precisions: in addition to INT4, INT8, BF16, FP16, and FP64, a new TF32 format has been added.
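TF32 keeps the 8-bit exponent of FP32 but only a 10-bit mantissa (the same mantissa width as FP16). The Python sketch below approximates the resulting loss of precision by clearing the 13 low-order mantissa bits of a float32 value. It truncates for simplicity, whereas GPU hardware and libraries may round instead, so treat it as an illustration rather than an exact emulation; the function name is hypothetical.

```python
import math
import struct


def to_tf32_truncated(x: float) -> float:
    """Approximate TF32 precision: keep sign, 8-bit exponent and the top 10 mantissa bits."""
    # Reinterpret the float32 encoding of x as a 32-bit unsigned integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # FP32 has 23 mantissa bits; TF32 keeps 10, so clear the lowest 13.
    bits &= 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    print(to_tf32_truncated(math.pi))  # 3.140625
```

The exponent range is unchanged, so very large or very small values stay representable; only the number of significant digits drops.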

On CERIT-SC GPU clusters, it is possible to use Docker images from NVIDIA GPU Cloud (NGC), the most widely used environment for developing machine learning and deep learning applications, HPC applications, and visualizations accelerated by NVIDIA GPU cards. Deploying these applications is then a matter of copying the link to the appropriate Docker image and running it in a Docker container (in Podman, or alternatively in Singularity). More information can be found at https://wiki.metacentrum.cz/wiki/NVidia_deep_learning_frameworks
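For Singularity, the deployment step described above can be sketched as two commands: pull the NGC image through a `docker://` URI into a local image file, then run a command in it with `--nv`, which makes the NVIDIA GPU visible inside the container. The Python helper below only builds these command lines. The image tag, file name, and training script are hypothetical placeholders, and the exact workflow on CERIT-SC may differ (see the wiki page referenced in the paragraph above).

```python
from typing import List


def ngc_singularity_commands(image_link: str, sif_file: str,
                             command: List[str]) -> List[List[str]]:
    """Return argv lists that pull an NGC Docker image and run a command in it on a GPU."""
    uri = image_link if image_link.startswith("docker://") else f"docker://{image_link}"
    pull = ["singularity", "pull", sif_file, uri]              # convert the Docker image to a SIF file
    run = ["singularity", "exec", "--nv", sif_file, *command]  # --nv exposes the NVIDIA GPU
    return [pull, run]


if __name__ == "__main__":
    # Placeholder image link copied from NGC; replace the tag with a real one.
    for argv in ngc_singularity_commands("nvcr.io/nvidia/pytorch:XX.YY-py3",
                                         "pytorch.sif", ["python", "train.py"]):
        print(" ".join(argv))
```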

For a complete list of available hardware in MetaCentrum, see http://metavo.metacentrum.cz/pbsmon2/hardware

MetaCentrum

Dear Madam/Sir,

We invite you to a new EuroHPC event:

LUMI ROADSHOW

The EuroHPC LUMI supercomputer, currently under deployment in Kajaani, Finland, will be one of the world’s fastest computing systems, with a performance of over 550 PFlop/s. The LUMI supercomputer is procured jointly by the EuroHPC Joint Undertaking and the LUMI consortium. IT4Innovations is one of the LUMI consortium members.

We are organizing a special event to introduce the LUMI supercomputer and to announce the first early-access call for pilot testing of this world-unique infrastructure, which is open exclusively to the consortium's member states.

The event will also introduce the Czech National Competence Center in HPC. IT4Innovations joined the EuroCC project, which was kicked off by the EuroHPC JU in September, and is now establishing the National Competence Center for HPC in the Czech Republic. The center will help share knowledge and expertise in HPC and implement supporting activities in this field focused on industry, academia, and public administration.

Register now for this event, which will take place online on February 17, 2021! It will bring together the main Czech stakeholders from the HPC community.

The event will be held in English.

Event webpage: https://events.it4i.cz/e/LUMI_Roadshow

Dear users,

I'm glad to announce that MetaCentrum's computing capacity has been extended with new clusters:

1) cluster kirke.meta.czu.cz (location Plzeň, owner CESNET), 60 nodes, 3840 CPU cores, in each node:

The cluster can be accessed via conventional job submission through the PBS batch system (@pbs-meta server) in the default queues.

2) cluster elwe.hw.elixir-czech.cz (location Praha, owner ELIXIR-CZ), 20 nodes, 1280 CPU cores, in each node:

The cluster can be accessed via conventional job submission through the PBS batch system (@pbs-elixir server) in the default queues and is dedicated to ELIXIR-CZ users.

3) cluster eltu.hw.elixir-czech.cz (location Vestec, owner ELIXIR-CZ), 2 nodes, 192 CPU cores, in each node:

The cluster can be accessed via conventional job submission through the PBS batch system (@pbs-elixir server) in the default queues and is dedicated to ELIXIR-CZ users.

4) cluster samson.ueb.cas.cz (location Olomouc, owner Institute of Experimental Botany of the Czech Academy of Sciences, Ústav experimentální botaniky AV ČR), 1 node, 112 CPU cores, in each node:

The cluster can be accessed via conventional job submission through the PBS batch system (@pbs-cerit server) in the priority queues prio and ueb for the owners, and in the default short queues for other users.

For a complete list of available hardware in MetaCentrum, see http://metavo.metacentrum.cz/pbsmon2/hardware

MetaCentrum

Dear users,

I'm glad to announce that MetaCentrum's computing capacity has been extended with a new cluster:

gita.cerit-sc.cz (location Brno, owner CERIT-SC), 14+14 nodes, 892 CPU cores, GPU card NVIDIA 2080 Ti in half of the nodes; in each node:

The cluster can be accessed via conventional job submission through the PBS batch system (@pbs-cerit server) in the gpu priority queue and the default queues.

For a complete list of available hardware in MetaCentrum, see http://metavo.metacentrum.cz/pbsmon2/hardware

MetaCentrum

All PBS servers in MetaCentrum / CERIT-SC will be upgraded to a new version this week.

The most significant change is that PBS will now send job-termination notifications directly whenever it kills a job for exceeding its memory, CPU, or walltime limits. The new settings will not take effect until all compute nodes have been restarted.
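To illustrate what a limit "violation" means, the following Python sketch compares a job's requested limits with its measured usage and lists the ones that were exceeded. It is a simplified, hypothetical model: the function names and the resource keys (for example expressing memory as `mem_gb`) are assumptions, and this is not the logic PBS itself uses to decide when to kill a job.

```python
from typing import Dict, List


def walltime_to_seconds(value: str) -> int:
    """Convert a PBS-style duration '[[hours:]minutes:]seconds' (integers only) to seconds."""
    total = 0
    for part in value.split(":"):
        total = total * 60 + int(part)
    return total


def violated_limits(requested: Dict[str, float], used: Dict[str, float]) -> List[str]:
    """Return the names of resources whose measured usage exceeds the request."""
    return sorted(name for name, limit in requested.items()
                  if used.get(name, 0) > limit)


if __name__ == "__main__":
    requested = {"walltime": walltime_to_seconds("2:00:00"), "mem_gb": 4, "ncpus": 2}
    used = {"walltime": walltime_to_seconds("2:05:10"), "mem_gb": 3.2, "ncpus": 2}
    print(violated_limits(requested, used))  # ['walltime']
```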

See the documentation for more information:

https://wiki.metacentrum.cz/wiki/Beginners_guide#Forced_job_termination_by_PBS_server

The upgrade of Debian9 machines to Debian10 will be completed shortly in both scheduling systems, with the exception of old out-of-warranty machines running Debian9, which will be decommissioned soon. Machines running CentOS are not affected by the upgrade.

This means that soon no machine running Debian9 will be available. Please remove the os=debian9 request from your jobs; jobs with this request will not start.

Compatibility issues with some applications on Debian10 (missing libraries) are being resolved continuously by recompiling software modules. If you encounter a problem with your application, try adding the debian9-compat module at the beginning of your submission script. If you experience any problems with library or application compatibility, please report them to meta@cesnet.cz.
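If you generate resource requests programmatically, the `os=debian9` property can be dropped mechanically. The Python sketch below assumes the usual PBS select syntax, in which chunks are separated by `+` and the resources within a chunk by `:`. The function name is hypothetical, and editing the request by hand works just as well.

```python
def drop_debian9(select: str) -> str:
    """Remove the 'os=debian9' property from every chunk of a PBS select request."""
    prefix = "select="
    has_prefix = select.startswith(prefix)
    body = select[len(prefix):] if has_prefix else select
    cleaned = []
    for chunk in body.split("+"):  # chunks are separated by '+'
        # resources within a chunk are separated by ':'
        parts = [p for p in chunk.split(":") if p != "os=debian9"]
        cleaned.append(":".join(parts))
    result = "+".join(cleaned)
    return prefix + result if has_prefix else result


if __name__ == "__main__":
    print(drop_debian9("select=1:ncpus=4:mem=8gb:os=debian9"))
    # select=1:ncpus=4:mem=8gb
```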

Lists of nodes running Debian9, Debian10, and CentOS7 are available in the PBSMon application:

* https://metavo.metacentrum.cz/pbsmon2/props?property=os%3Ddebian9

* https://metavo.metacentrum.cz/pbsmon2/props?property=os%3Ddebian10

* https://metavo.metacentrum.cz/pbsmon2/props?property=os%3Dcentos7
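The `%3D` in these links is simply the URL-encoded `=` of the node property (for example `os=debian9`). The Python sketch below rebuilds the three links with the standard library's `urllib.parse.urlencode`; only the base URL and the property values come from the list above, and the function name is illustrative.

```python
from urllib.parse import urlencode

PBSMON_PROPS = "https://metavo.metacentrum.cz/pbsmon2/props"


def pbsmon_props_url(os_name: str) -> str:
    """Build the PBSMon URL that lists nodes having the property os=<os_name>."""
    # urlencode escapes the '=' inside the value as %3D
    query = urlencode({"property": "os=" + os_name})
    return f"{PBSMON_PROPS}?{query}"


if __name__ == "__main__":
    for os_name in ("debian9", "debian10", "centos7"):
        print(pbsmon_props_url(os_name))
```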

List of frontends with their current OS: https://wiki.metacentrum.cz/wiki/Frontend

Note: Machines with other operating systems (CentOS7) will continue to be available through special queues: urga, ungu (uv@wagap-pro queue), and phi (phi@wagap-pro queue).

Dear users,

to avoid triggering spam filters when a large number of PBS email notifications is sent in a short time, PBS notifications will from now on be aggregated in 30-minute intervals. This applies to notifications about the end or failure of a computational job. Notifications about the start of a job will be sent as before, i.e. immediately.
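The aggregation rule can be illustrated with a short Python sketch: job-start notifications pass through immediately, while end and failure notifications are collected into 30-minute batches. This is a simplified model that assumes fixed 30-minute windows aligned to the clock; the event format and function name are hypothetical, and the actual PBS mechanism may group messages differently.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

WINDOW_S = 30 * 60  # aggregation interval of 30 minutes, in seconds

# (time in seconds, kind, message); kind is "begin", "end" or "fail"
Event = Tuple[int, str, str]


def schedule_notifications(events: List[Event]) -> Tuple[List[Event], Dict[int, List[str]]]:
    """Split events into immediate sends and per-window digests.

    Digests are keyed by the time at which their 30-minute window closes.
    """
    immediate: List[Event] = []
    digests: Dict[int, List[str]] = defaultdict(list)
    for ts, kind, message in sorted(events):
        if kind == "begin":
            immediate.append((ts, kind, message))  # start notices are not delayed
        else:
            window_end = (ts // WINDOW_S + 1) * WINDOW_S
            digests[window_end].append(message)
    return immediate, dict(digests)


if __name__ == "__main__":
    events = [
        (0, "begin", "job 1 started"),
        (60, "end", "job 1 finished"),
        (1700, "fail", "job 2 failed"),
        (1900, "end", "job 3 finished"),
    ]
    now, batches = schedule_notifications(events)
    print(now)      # [(0, 'begin', 'job 1 started')]
    print(batches)  # {1800: ['job 1 finished', 'job 2 failed'], 3600: ['job 3 finished']}
```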

For more information see https://wiki.metacentrum.cz/wiki/Email_notifications

Dear users,

let us resend you the following invitation:

--

We invite you to a new PRACE training course, organized by IT4Innovations National Supercomputing Center, with the title:

Parallel Visualization of Scientific Data using Blender

Basic information:

Date: Thu September 24, 2020, 9:30am - 4:30pm

Registration deadline: Wed September 16, 2020

Venue: IT4Innovations, Studentska 1b, Ostrava

Tutors: Petr Strakoš, Milan Jaroš, Alena Ješko (IT4Innovations)

Level: Beginners

Language: English

Main web page: https://events.prace-ri.eu/e/ParVis-09-2020

The course, an enriched rerun of a successful training from 2019, will focus on visualization of scientific data that can arise from simulations of different physical phenomena (e.g. fluid dynamics, structural analysis, etc.). To create visually pleasing outputs of such data, a path tracing rendering method will be used within the popular 3D creation suite Blender. We shall introduce two plug-ins we have developed: Covise Nodes and Bheappe. The first extends Blender's capabilities for processing scientific data, while the second integrates cluster rendering into Blender. Moreover, we shall demonstrate the basics of Blender, present a data visualization example, and render a created scene on a supercomputer.

This training is a PRACE Training Centre course (PTC), co-funded by the Partnership for Advanced Computing in Europe (PRACE).

For more information and registration please visit

https://events.prace-ri.eu/e/ParVis-09-2020 or https://events.it4i.cz/e/ParVis-09-2020.

PLEASE NOTE: The organization of the course will be adapted to the current COVID-19 regulations, and participants must comply with them. If the number of participants has to be reduced, earlier registrations will be given priority.

We look forward to meeting you at the course.

Best regards,

Training Team IT4Innovations

training@it4i.cz

Dear users of MetaCentrum Cloud,

We would like to inform you of a new service deployed in MetaCentrum Cloud. Load Balancer as a Service (LBaaS) gives users the ability to create and manage load balancers that provide access to services hosted on MetaCentrum Cloud.
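To give an idea of what a load balancer does, the Python sketch below distributes incoming requests over a pool of backend servers using round robin, one of the common balancing algorithms. It is a conceptual toy with hypothetical backend addresses, not the interface of the MetaCentrum Cloud service, whose load balancers are created and configured as described in the documentation.

```python
from itertools import cycle
from typing import Iterator, List


class RoundRobinBalancer:
    """Toy load balancer that forwards each request to the next backend in turn."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation: Iterator[str] = cycle(backends)

    def pick(self) -> str:
        """Return the backend address that should receive the next request."""
        return next(self._rotation)


if __name__ == "__main__":
    # Hypothetical addresses of three virtual machines running the same web service.
    lb = RoundRobinBalancer(["10.0.0.11:80", "10.0.0.12:80", "10.0.0.13:80"])
    print([lb.pick() for _ in range(4)])
    # ['10.0.0.11:80', '10.0.0.12:80', '10.0.0.13:80', '10.0.0.11:80']
```

Clients only ever talk to the balancer's single address, so backends can be added or replaced without changing how the service is reached.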

A short description of the service and its documentation: https://cloud.gitlab-pages.ics.muni.cz/documentation/gui/#lbaas.

Kind regards

MetaCentrum Cloud team

cloud.metacentrum.cz

Let us inform you about the following operational news of the MetaCentrum & CERIT-SC infrastructures:

Open OnDemand is a service that enables users to access CERIT-SC computational resources in graphical mode via a web browser. The most used applications available include Matlab, ANSYS, and VMD. To log in to the Open OnDemand interface at https://ondemand.cerit-sc.cz/, use your MetaCentrum login and MetaCentrum password.

Contact e-mail: support@cerit-sc.cz

https://wiki.metacentrum.cz/wiki/OnDemand

NVIDIA deep learning frameworks can be run in Singularity (across all of MetaCentrum) or in Docker (via Podman; CERIT-SC only).

https://wiki.metacentrum.cz/wiki/NVidia_deep_learning_frameworks

CVMFS (CernVM File System) is a filesystem developed at CERN to allow fast, scalable, and reliable deployment of software on distributed computing infrastructure. CVMFS is a read-only filesystem. Files and their metadata are transferred to the user on demand, with the use of aggressive caching. The CVMFS software consists of client-side software for accessing CVMFS repositories (similar to AFS volumes) and server-side tools for creating new CVMFS repositories.
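The access model described above (read-only, files fetched on first use and then served from a local cache) can be illustrated with a small Python toy. This is a conceptual sketch only: the class name, the fetch callback, and the repository path are hypothetical, and the real CVMFS client works very differently internally.

```python
from typing import Callable, Dict


class OnDemandReadOnlyFS:
    """Toy model of a read-only filesystem that fetches files lazily and caches them."""

    def __init__(self, fetch: Callable[[str], bytes]) -> None:
        self._fetch = fetch                 # retrieves a file from the remote repository
        self._cache: Dict[str, bytes] = {}
        self.remote_fetches = 0             # counts how often the remote side was contacted

    def read(self, path: str) -> bytes:
        """Return file contents, downloading them only on the first access."""
        if path not in self._cache:
            self._cache[path] = self._fetch(path)
            self.remote_fetches += 1
        return self._cache[path]

    def write(self, path: str, data: bytes) -> None:
        """Writes are rejected: clients only ever read from the repository."""
        raise PermissionError(f"{path}: repository is read-only")


if __name__ == "__main__":
    # Hypothetical repository content standing in for a remote server.
    remote = {"/cvmfs/example.org/bin/tool": b"#!/bin/sh\necho hello\n"}
    fs = OnDemandReadOnlyFS(remote.__getitem__)
    fs.read("/cvmfs/example.org/bin/tool")
    fs.read("/cvmfs/example.org/bin/tool")
    print(fs.remote_fetches)  # 1
```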

https://wiki.metacentrum.cz/wiki/CVMFS

Dear users,

Let us inform you about a new service now available for research and development teams.

It is provided by IT4Innovations within the H2020 POP2 Center of Excellence project.

*Free parallel application performance optimization assistance* is intended both for academic and scientific staff and for employees of companies that develop or use parallel codes and tools and need professional help with optimizing their parallel codes for HPC systems.

If you are interested, do not hesitate to contact IT4I at info@it4i.cz.

Regards,

Yours, IT4Innovations

Dear users,

let us invite you to three full-day NVIDIA Deep Learning Institute certified training courses to learn more about Artificial Intelligence (AI) and High Performance Computing (HPC) development for NVIDIA GPUs.

The first half-day is an introduction by IT4Innovations and M Computers to the latest state-of-the-art NVIDIA technologies. We will also explain the services we offer for AI and HPC to industrial and academic users. The introduction will include a tour through IT4Innovations' computing center, which hosts an NVIDIA DGX-2 system and the new Barbora cluster with V100 GPUs.

The first full-day training course, Fundamentals of Deep Learning for Computer Vision, is provided by IT4Innovations and gives you an introduction to AI development for NVIDIA GPUs.

Two further HPC-related full-day courses, Fundamentals of Accelerated Computing with CUDA C/C++ and Fundamentals of Accelerated Computing with OpenACC, are delivered as PRACE training courses in collaboration with the Leibniz Supercomputing Centre of the Bavarian Academy of Sciences (Germany).

We are pleased to be able, for the first time, to offer the course Fundamentals of Deep Learning for Computer Vision to industry free of charge. Further courses for industry may be arranged upon request.

Academic users can participate in all three courses free of charge.

For more information visit http://nvidiaacademy.it4i.cz

After the successful upgrade of the PBS server in CERIT-SC, the other two PBS servers (arien-pro.ics.muni.cz and pbs.elixir-czech.cz) will be upgraded to a new version with a newer, incompatible Kerberos implementation; the transition starts on January 8, 2020. We are therefore preparing new PBS servers, and the existing PBS servers will be shut down once their jobs have finished:

Sorry for any inconvenience caused.

Yours, MetaCentrum

Let us inform you about the following operational news of the MetaCentrum & CERIT-SC infrastructures: